Bots Scrape News Sites to Generate... (fake) News Sites

Earlier this week I read an article in Het Financieele Dagblad (fd.nl), a Dutch financial news website [2][3]. It was about bots scraping their content to build vast datasets on which large language models can be trained. The first paragraph ends with ‘Attempts to block websites don’t suffice’, and further on the article reiterates that it’s ‘technically difficult to shield websites against bots’ and that ‘they discovered that bots copy articles protected with a paywall’.

This doesn’t surprise me at all. Some bots have become so sophisticated that, without specialized software, they are indistinguishable from normal human visitors. When the stakes are high and the potential profit is enormous, you can afford to pay thousands of dollars a month for a team of specialists who build and continuously update the best content-scraping bots known to mankind. Then you’re king of your own bot army. And for those wondering: these bots will happily ignore robots.txt, terms and conditions, etc.

Content scraped from millions of news articles ends up in LLMs (Large Language Models) like ChatGPT, Bard and a zillion lesser-known LLMs. Once trained, these models make big bucks without any compensation to the original writers and owners of the content. To make it even more bitter, “copycat websites” use bots to scrape content from news sites and top publishers, rewrite it with an LLM, publish the “rewritten by an LLM” version on their “news websites” (read: MFA news websites [8]), and place ads on these websites to monetize the “hard work of the bots”, as described in this Bloomberg News article [4][5]. These content farms add no value; on the contrary, they host plagiarized versions of the news, fake news and/or plain clickbait, solely to lure people (and, don’t worry, also bots) to their websites where the ads are shown. They use LLMs to rewrite the articles because rewritten text is hard to flag with automated plagiarism checkers. Ironically, the LLMs (re)generating these copycat articles were trained on content from the very same news websites.

The bills and *cough* hard work of these copycat websites are indirectly paid for by advertisers. By offering lots of advertising slots, they promise advertisers and agencies the world! Luckily, the domains of these websites are typically relatively new and have little or no reputation, so they can usually be found in the so-called “long tail” of websites. That is exactly where your advertisements shouldn’t be placed, and one more reason to use an inclusion whitelist of domains where your ads are allowed.
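An inclusion whitelist boils down to a strict host check before an ad is placed. A minimal sketch, assuming a hypothetical hand-maintained `ALLOWED_DOMAINS` set; it accepts the exact domain or any subdomain of it, and deliberately does not attempt public-suffix handling.

```python
from urllib.parse import urlsplit

# Hypothetical inclusion list maintained by the advertiser or agency.
ALLOWED_DOMAINS = frozenset({"fd.nl", "ft.com", "nytimes.com"})


def is_allowed(page_url: str, allowed: frozenset[str] = ALLOWED_DOMAINS) -> bool:
    """Return True only if the page's host is an allowed domain or a subdomain of one."""
    host = (urlsplit(page_url).hostname or "").lower().rstrip(".")
    labels = host.split(".")
    # "www.fd.nl" is checked as "www.fd.nl", then "fd.nl", then "nl".
    # Look-alikes such as "fd.nl.copycat.example" or "evil-fd.nl" never match.
    return any(".".join(labels[i:]) in allowed for i in range(len(labels)))
```

Matching on whole labels (rather than a substring test such as `"fd.nl" in url`) is what keeps look-alike copycat domains out.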

Web Archiving

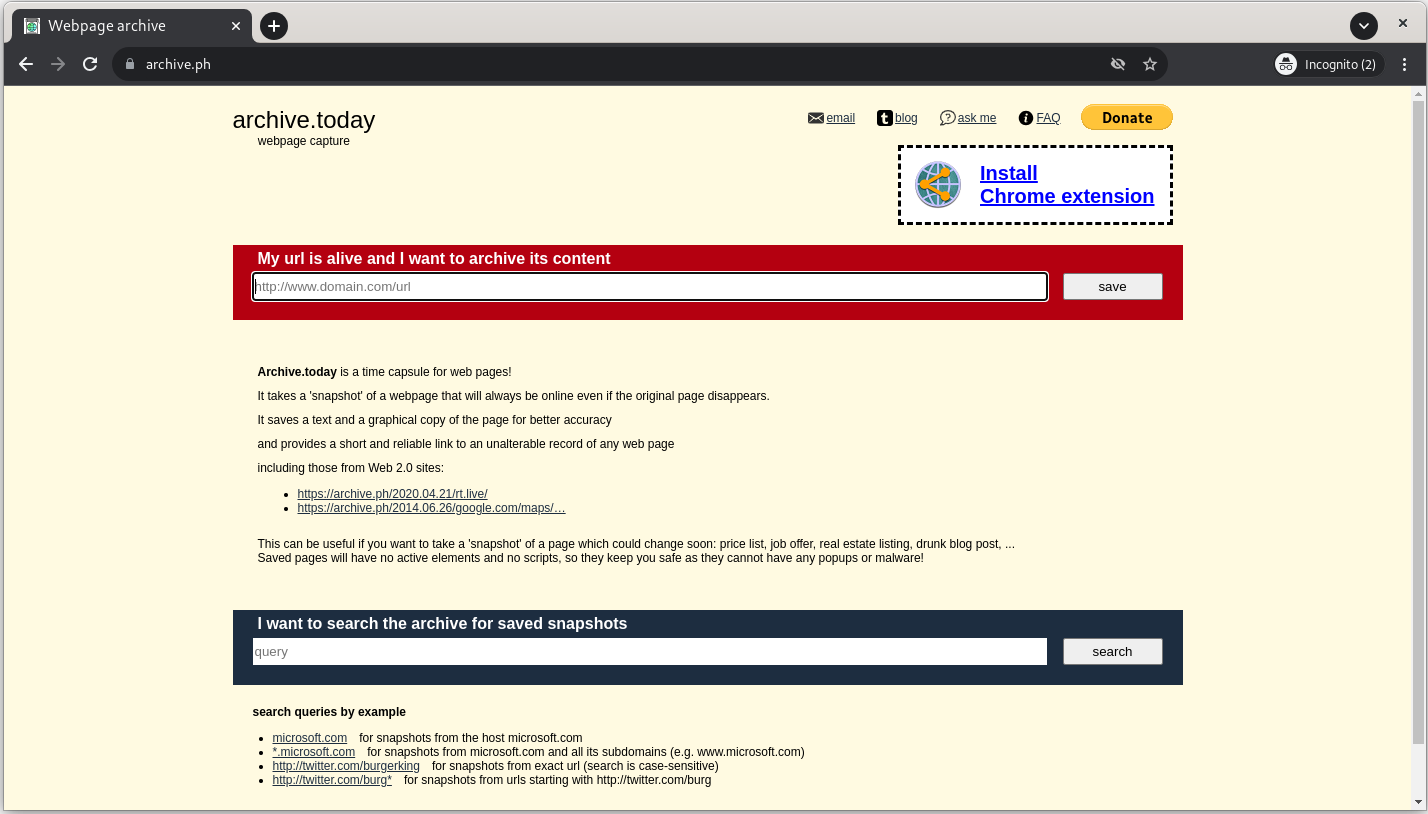

Another form of content stealing is done by archive.today [1]. The website claims to be a time capsule for web pages. At first glance, a noble mission statement! Except that this service can be used to capture content from news publishers, paywalled websites, etc., and once captured and archived, the articles can immediately be accessed free of charge, even articles behind a paywall.

Being able to do this implies that archive.today has subscriptions to most newspapers and magazines, not just fd.nl but also ft.com, nytimes.com, wsj.com, reuters.com and bloomberg.com, as well as many other non-financial news websites, in many other languages. Try it for yourself: go to https://archive.today, take the URL of a news article behind a paywall and paste it into the dark blue search bar to search the archive. If the URL has not been archived yet, you can ask their bots to capture it by pasting the URL into the red bar.

This implies that none of these publishers and news websites have any bot detection in place. And if they do, it clearly doesn’t work!

So, how does the archive.today bot work? And by extension many other bots, because most bots share 95% of their technology stack; only the remaining 5% determines whether it is an easy-to-detect bot or a pain-in-the-neck bot. To find out, I requested a few URLs to be added to the archive. To make my life easier I added a unique suffix to each URL so I could recognize the specific request made by the bot. The websites hosting these URLs run Oxford Biochronometrics’ fraud detection technology, so I should be able to isolate this “visitor”.
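Tagging each submitted URL with a unique marker makes the bot’s request easy to pick out of the logs afterwards. A minimal sketch of that idea, assuming a hypothetical query parameter named `probe` (the article does not say which suffix format was used) and that the requested URLs are available from your access logs or detection data.

```python
import uuid
from urllib.parse import parse_qs, parse_qsl, urlencode, urlsplit, urlunsplit

PROBE_PARAM = "probe"  # hypothetical parameter name for the unique suffix


def tag_url(url: str) -> tuple[str, str]:
    """Append a unique probe token to the URL's query string; return (tagged_url, token)."""
    token = uuid.uuid4().hex
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query.append((PROBE_PARAM, token))
    return urlunsplit(parts._replace(query=urlencode(query))), token


def find_probe_hits(requested_urls: list[str], token: str) -> list[str]:
    """Return the logged request URLs that carry the given probe token."""
    return [
        u for u in requested_urls
        if token in parse_qs(urlsplit(u).query).get(PROBE_PARAM, [])
    ]
```

Usage: submit the `tagged_url` from `tag_url(...)` to the archive service, keep the token, and later run `find_probe_hits` over the request URLs in your logs to isolate that one visit.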

How do their bots look from Oxford Biochronometrics’ perspective? All recorded visits by their scraping bot used a similar technology stack:

- Based on their IP addresses, the bots all resided in data centers, each in a different country in my small sample test.
- The User Agent used was: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36
- The browser engine was indeed a genuine Chrome/92 browser. Had the userAgent been spoofed, the detection would have flagged it for that reason. Spoofing is a red flag!
- navigator.webdriver did not exist. In a real Chrome/92 this property should be ‘false’, or ‘true’ when the browser is being controlled remotely by automation software. Again, a red flag!
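The third item in the list compares what the User Agent string claims with what the browser engine actually is. A minimal sketch of that comparison, assuming a hypothetical `engine_major` value produced by in-browser feature detection (how such an estimate is obtained is not described in this article); the regex only handles Chrome-style UA strings.

```python
import re
from typing import Optional

CHROME_UA = re.compile(r"Chrome/(\d+)\.")


def ua_chrome_major(user_agent: str) -> Optional[int]:
    """Extract the Chrome major version claimed by a User Agent string, if any."""
    m = CHROME_UA.search(user_agent)
    return int(m.group(1)) if m else None


def is_spoofed(user_agent: str, engine_major: Optional[int], tolerance: int = 0) -> bool:
    """Flag a visit whose claimed Chrome version disagrees with the detected engine.

    engine_major is a hypothetical estimate from client-side feature detection;
    None means no Chrome engine was detected at all.
    """
    claimed = ua_chrome_major(user_agent)
    if claimed is None:
        return False  # not claiming to be Chrome: out of scope for this check
    if engine_major is None:
        return True  # claims Chrome, but the engine does not behave like Chrome
    return abs(claimed - engine_major) > tolerance
```

For the archive.today visits described above, the claimed and detected versions matched (both Chrome/92), so this particular check passes; the other items in the list are what give the bot away.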

The list above can be seen as a low-hanging-fraud checklist [6] and contains a lot of useful information. The first bullet point shows that the bot resides in data centers, probably all around the world. The first question you should ask yourself is: why are data center visitors allowed to read premium articles? Which visitors would ever visit your website from a data center? Your 24/7 monitoring and maintenance team? The only legitimate reason I can think of is VPN users, because VPN endpoints reside in data centers to ensure high bandwidth and low latency to and from the Internet.
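Checking whether a visitor comes from a data center is a range lookup against a purchased IP metadata database. A minimal sketch using Python’s standard `ipaddress` module, assuming a hypothetical `DATACENTER_RANGES` list; the CIDR blocks below are documentation-only placeholders (RFC 5737 / RFC 3849), not real data center ranges.

```python
import ipaddress

# Placeholder ranges (documentation prefixes) standing in for a purchased
# data center IP database that should be refreshed monthly.
DATACENTER_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24", "2001:db8::/32")
]


def is_datacenter_ip(ip: str) -> bool:
    """Return True if the address falls inside any known data center range."""
    addr = ipaddress.ip_address(ip)
    # Membership between different IP versions simply evaluates to False.
    return any(addr in net for net in DATACENTER_RANGES)
```

A real database holds far more ranges than this; at that size a sorted or trie-based lookup would replace the linear scan, but the question asked per visit stays the same.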

Chrome/92 was released in July 2021 [7]. That’s over two years ago! The percentage of visitors using this browser on desktop in Sep 2023 is near zero, and if a Chrome/92 visitor comes from one or more data centers, all sharing the same membership account, you know what to do! Another question, of course, is: why hasn’t archive.today updated this browser to a more modern Chrome version? Simply because it still works!
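The reasoning above can be turned into a simple rule: how far does the claimed Chrome major version lag behind the current stable release, and does that coincide with data center origin and one account seen from several countries? A minimal sketch, assuming you supply `current_major` yourself (Chrome ships a new major version roughly every four weeks) and a hypothetical `max_lag` threshold; an outdated version on its own is only one signal.

```python
import re
from typing import Optional


def chrome_major(user_agent: str) -> Optional[int]:
    """Chrome major version claimed in the User Agent, or None."""
    m = re.search(r"Chrome/(\d+)\.", user_agent)
    return int(m.group(1)) if m else None


def is_outdated_chrome(user_agent: str, current_major: int, max_lag: int = 6) -> bool:
    """True if the claimed Chrome version lags the current stable by more than max_lag."""
    claimed = chrome_major(user_agent)
    return claimed is not None and current_major - claimed > max_lag


def needs_review(user_agent: str, current_major: int, from_datacenter: bool,
                 countries_for_account: int, max_lag: int = 6) -> bool:
    """Combine the signals: outdated Chrome, data center origin, and one
    membership account used from more than one country."""
    return (is_outdated_chrome(user_agent, current_major, max_lag)
            and from_datacenter
            and countries_for_account > 1)
```

With a hypothetical `current_major` of 117, the Chrome/92 bot lags by 25 major versions, far beyond any reasonable update delay.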

The fourth bullet point is a technical check. It should be validated in the browser using JavaScript when the web page is loaded. That the bot got away with it shows that no bot detection is present on these websites, as this is one of the most basic checks that reveals the browser IS a bot. BTW, it’s also very easy for a bot to set this flag to ‘false’, so don’t rely on it too much! Besides these technical checks, the data holds more clues that reveal this visitor is a bot. The same applies to bots from other ‘indexing’ or ‘scraping’ services. Building a bot using browser automation isn’t that hard. The difficult part is making the bots blend in AT SCALE!
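The check itself runs in the browser, but its result has to reach your analytics to be useful. A minimal sketch of the server side, assuming a hypothetical JSON beacon in which the page’s JavaScript sends `JSON.stringify({webdriver: navigator.webdriver})`; because `JSON.stringify` omits keys whose value is `undefined`, a missing property arrives as a missing key. As noted above, ‘false’ proves nothing; ‘true’ or a missing value is the red flag.

```python
import json
from enum import Enum


class WebdriverState(Enum):
    FALSE = "false"      # expected for a normal Chrome visitor, but trivial for bots to fake
    TRUE = "true"        # browser reports it is controlled by automation
    MISSING = "missing"  # property absent, as observed with the archiving bot
    INVALID = "invalid"  # unexpected payload or value type


def webdriver_state(beacon_body: str) -> WebdriverState:
    """Classify the navigator.webdriver value reported by the (hypothetical) beacon."""
    payload = json.loads(beacon_body)
    if not isinstance(payload, dict):
        return WebdriverState.INVALID
    if "webdriver" not in payload:
        return WebdriverState.MISSING
    value = payload["webdriver"]
    if value is True:
        return WebdriverState.TRUE
    if value is False:
        return WebdriverState.FALSE
    return WebdriverState.INVALID


def is_red_flag(state: WebdriverState) -> bool:
    """Anything other than a plain false deserves a closer look."""
    return state is not WebdriverState.FALSE
```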

Because subscriptions are used to access paywalled and/or premium articles, a simple analysis can be run: which accounts access all of our articles, how often, around the clock, and what percentage of those visits comes from a data center? From data centers in multiple countries? To know which IP ranges belong to data centers, you can buy databases with metadata per IP address range; once bought, they should be updated monthly to include newly added data center IP ranges.
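The subscription analysis described above is essentially a group-by over account IDs. A minimal sketch, assuming hypothetical visit records in which the data center flag was already looked up in an IP metadata database; which thresholds to apply to the resulting profile is left to you.

```python
from collections import defaultdict
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    account: str
    article: str
    hour: int             # hour of day, 0-23
    country: str
    from_datacenter: bool


def account_profile(visits: Iterable[Visit]) -> dict[str, dict[str, float]]:
    """Per account: distinct articles, active hours, countries and data center share."""
    grouped: dict[str, list[Visit]] = defaultdict(list)
    for v in visits:
        grouped[v.account].append(v)
    return {
        account: {
            "articles": len({v.article for v in vs}),
            "active_hours": len({v.hour for v in vs}),  # 24 means active around the clock
            "countries": len({v.country for v in vs}),
            "datacenter_share": sum(v.from_datacenter for v in vs) / len(vs),
        }
        for account, vs in grouped.items()
    }
```

An account that reads everything, in all 24 hours of the day, from data centers in several countries stands out immediately in this table.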

This blog started with some quotes from the fd.nl article about bots scraping their content: ‘Attempts to block websites don’t suffice’, ‘technically difficult to shield websites against bots’, and ‘they discovered that bots copy articles protected with a paywall’. It appears that with some reasoning, some basic analytics and some IT knowledge, spotting bots isn’t that difficult. So, how do you fight these bots?

Analytics, analytics and analytics.

Without knowing who is loading your content, which devices are visiting you and where your visitors come from, you will always be guessing. To separate your legitimate audience from bots and fraud, you’ll have to slice the data and segment it into groups. Having read this article, you know what to look for; it’s fairly simple, see the four-point checklist above.

Once bots are blocked, it is just a matter of time until they adapt and/or update their technology stack. It would be wishful thinking to expect them to simply disappear. So, once they have adapted, they should reappear in the analytics. Where? And why? Because, as mentioned before, it’s hard to build bots at scale that blend in with the regular human audience. Due to the sheer volume of visits these bots make to your website, they automatically form a group of statistical outliers and will thus be visible in the analytics. The underlying question is: what makes them unique this time? And next time? And the time after that? Etc.
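Segmenting traffic and comparing each segment’s share of visits between two periods is one simple way to make a re-appearing bot group stand out. A minimal sketch, assuming hypothetical segment keys such as (browser, data center flag, country) and a hypothetical `min_ratio` threshold; in practice you would also require a minimum number of visits per segment so that tiny segments don’t trigger on noise.

```python
from collections import Counter
from collections.abc import Hashable, Iterable


def segment_shares(segments: Iterable[Hashable]) -> dict[Hashable, float]:
    """Share of all visits per segment key."""
    counts = Counter(segments)
    total = sum(counts.values())
    return {key: n / total for key, n in counts.items()}


def growing_segments(baseline: Iterable[Hashable], current: Iterable[Hashable],
                     min_ratio: float = 3.0, floor: float = 1e-4) -> list[Hashable]:
    """Segments whose current share is at least min_ratio times their baseline share.

    Segments absent from the baseline get a small floor share, so brand-new groups
    (for example, bots that just switched their technology stack) are caught too.
    """
    base = segment_shares(baseline)
    now = segment_shares(current)
    flagged = [k for k, share in now.items() if share / base.get(k, floor) >= min_ratio]
    return sorted(flagged, key=lambda k: now[k], reverse=True)
```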

Use common sense

Although I do see the added value of an Internet archive or Internet museum that preserves content, takes screenshots of web pages and archives entire websites, at this moment the service is abused to read premium content for free. The simplest and cleanest way to avoid hurting news publishers (and many others) too much, while still adhering to the archive’s mission, would be a 30-day non-viewing period for newly archived web pages.
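The proposed 30-day non-viewing period is straightforward to enforce at view time. A minimal sketch, assuming the archive stores a timezone-aware capture timestamp for each page; the function name is hypothetical, and only the 30 days come from the proposal above.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

EMBARGO = timedelta(days=30)  # the proposed non-viewing period


def is_publicly_viewable(captured_at: datetime, now: Optional[datetime] = None) -> bool:
    """A capture becomes viewable only once the full embargo period has elapsed."""
    now = now or datetime.now(timezone.utc)
    return now - captured_at >= EMBARGO
```

The capture itself still happens immediately, so the archive keeps its time-capsule function; only public viewing is delayed.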

Then there is the other case, where fake news websites are launched to spread fake news based on real content and/or to monetize visitors through advertisements. Their bots work similarly to the archiving bot described above. Will they adapt when data center IP ranges are blocked? Yes, they will. Will they start using residential proxies? Yes, they will, but at a price: > $2 per gigabyte. Once you add a bot detection layer and make their life even more miserable, the cost of running and continuously updating a scraping-bot infrastructure increases again.
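Back-of-the-envelope arithmetic makes the economics concrete. A minimal sketch, assuming hypothetical values for pages scraped per month and average page weight; only the ‘> $2 per gigabyte’ residential proxy price comes from the text, and since that is a lower bound, the result is a floor on the bandwidth bill alone.

```python
def monthly_proxy_cost(pages_per_month: int, mb_per_page: float, usd_per_gb: float = 2.0) -> float:
    """Bandwidth cost of routing scraping traffic through residential proxies (1 GB = 1000 MB)."""
    gigabytes = pages_per_month * mb_per_page / 1000
    return gigabytes * usd_per_gb


# Hypothetical example: one million article pages of about 2 MB each.
print(monthly_proxy_cost(1_000_000, 2.0))  # 4000.0
```

Every extra hurdle (blocked ranges, re-fetches after detection, engineering time to update the bots) multiplies on top of this floor.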

The goal is: make it economically unfeasible! Advertisements will not generate enough profit to cover all these expenses, especially once advertisers start using common sense and avoid advertising on MFA sites by using domain whitelists, and app whitelists too, since this phenomenon will surely shift (or already has shifted) to fake mobile news apps.

In the end, it is all about common sense and using analytics to see where and how the scraping bots move over time. Content isn’t a high-value product like limited-edition Nike sneakers, Taylor Swift tickets or NFL tickets, so making scraping economically unfeasible is doable and will work.

Questions? Comment, connect and/or DM

#adfraud #fakenews #digitalmarketing #MadeForAdvertising

[1] https://en.wikipedia.org/wiki/Archive.today

[2] https://fd.nl/bedrijfsleven/1490101/nederlandse-media-willen-plundering-door

[3] https://archive.ph/hCzVe (archive.today archived version of article at [2])

[5] https://archive.ph/LpnlL (archive.today archived version of article at [4])

[6] https://www.linkedin.com/pulse/data-exploration-how-help-you-catch-low-hanging-fraud-kouwenhoven

[7] https://support.google.com/chrome/a/answer/10314655?hl=en

[8] MFA (Made for advertising), https://en.wikipedia.org/wiki/Scraper_site#Made_for_advertising