Foundations of Fighting Ad Fraud

Part I

Ad fraud: collectively costing the industry billions per year, and apparently nobody gives a sh*t. The self-proclaimed and self-certified ‘defenders of the ecosystem’ continuously under-report the problem to the point of willful ignorance. That makes one wonder: Why? Who profits from this? Where do these billions end up? And what can be done about it?

A few weeks ago I read a LinkedIn post by John James in which he referred to WSJ columnist Jason Zweig’s three-part rule [15]:

There are three ways to make a living:

Lie to people who want to be lied to, and you’ll get rich.

Tell the truth to those who want the truth, and you’ll make a living.

Tell the truth to those who want to be lied to, and you’ll go broke.

Certain ad fraud verification companies would clearly fit the 1st category. Those companies “detecting and verifying” fraud supposedly can’t tell you how they do it, because disclosing their secrets would help fraudsters improve. Yet they send their verification JavaScript to millions of devices, including those of fraudsters! How does that rhyme? Anyone able to reverse engineer their JavaScript (and that ain’t so hard) knows exactly what data they collect to determine fraud. Fraudsters know how to reverse engineer, or hire someone who does. They know what data is relevant to detect fraud and actively try to counter it.

The companies hiring fraud verification vendors are not IT security companies that can do their own pentesting or code analysis. They are large companies (think S&P 500) that advertise digitally to promote their products or services across various channels: CTV, programmatic, PPC, social media, email, etc. Apparently, they blindly trust these verification companies. Given the reported percentage of detected fraud, typically < 1%, one wonders why they even verify their digital spend. If there isn’t a big problem, why spend so much money on it? Is it just to tick the checkbox so they can claim: We are compliant? If so, that’s probably that company’s most expensive checkbox.

The opposite of ‘ignorance is bliss’ is: Knowledge is power. Having knowledge means being in control. The majority of people working in the digital marketing ecosystem don’t have a master’s degree in computer science. Hence, they don’t fully understand what is technically possible and feasible, can’t tell the difference between a poor and a good quality ad fraud verification solution, and are easily intimidated by technical terms. Being unable to ask the right questions, and lacking the knowledge to see through false promises, indeed makes you the party being lied to in the 1st way to make a living.

Now, you might think: “Whatever...” or “Who cares? That’s the price of doing business, as long as I reach my audience.” But there are more important things than reaching your audience. Elections, for example. According to eMarketer, total US political ad spending will hit $12.32 billion in 2024, up nearly 29% from the prior presidential election in 2020 [16]. Burning only one party’s budget with a botnet, so that its ads never reach humans, does make a difference in swing states. And I’m sure you don’t want your digital marketing budget to fund fake news websites or misinformation meant to change the outcome of elections, to indirectly fund bad regimes, or to be spent on anything other than your campaign goals.

So, how will this blog series help you? By explaining the foundations of fraud detection: what types of detection exist, and what is and is not possible to achieve. It will show you real scenarios with real output. You don’t have to understand everything 100%, as long as you know whom to invite to ask these questions during your fraud detection vendor selection process, whether your contract is expiring or you’re not satisfied with your current vendor and want to select a new one.

You might think that this post would also educate fraudsters. Yes, maybe the script-kiddies, or students. But, rest assured, the professionals already know the content of this blog by heart. It’s their living.

Oxford Biochronometrics focuses on detecting fraud in lead generation based on human interactional behavioral analysis, but that doesn’t mean we’re not able to detect ad fraud. That’s why this blog describes all fraud detection mechanisms from top to bottom of the marketing funnel. It starts with pre-bid, impressions and video ads where tracking pixels are used. Subsequently, two distinct classes of fraud detection using JavaScript are described. The last part is about fraud detection based on how the application is used by looking at the interaction.

The next part of this series will cover how the analytics of fraud detection data can be turned into actionable data.

Ad Fraud detection: The techniques

There are two main types of client-side ad fraud detection, where the browser is involved: request based (aka tracking pixel) and JavaScript based. Figure 2 shows that JavaScript can logically be split into three groups, each extracting data with a different method. Besides the data conveyed by the JavaScript, technical features of how the data was sent, i.e. the TLS fingerprint and network packets, are also captured and used to determine ad fraud. All these layers of detection create a complete picture of the client side and the transport, and of whether that smells like fraud or not.

Technique 1: Tracking Pixel

A tracking pixel, technically an <img> tag in HTML, makes a request to the bidder’s server when a browser loads an advertisement that includes such a tracking pixel. Loading the pixel means that the browser requests and downloads the 1x1 pixel. It is not about displaying the pixel, but about conveying data. That’s why the browser’s request contains a payload with data such as names or IDs of the campaign, source, and media, in order to tie the pixel back to the advertisement and make the data actionable. Tracking pixels are typically fired:

when a video advertisement is loaded

at video completion percentages (25%, 50%, 75%, 100%)

on specific pages, to track conversion and/or transaction completion
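To make “conveying data” concrete, here is a minimal Python sketch of how a pixel URL could carry campaign data in its query string and how the receiving server reads it back. The endpoint and the parameter names (`cid`, `src`, `media`, `ev`) are hypothetical, and the payload is plain key=value pairs rather than the protobuf encoding seen in Figure 3.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical pixel endpoint; real ad servers use their own URLs and encodings.
PIXEL_ENDPOINT = "https://pixel.example.com/px.gif"


def build_pixel_url(campaign_id: str, source: str, media: str, event: str) -> str:
    """Append the tracking data as a query string to the 1x1 image URL."""
    params = {"cid": campaign_id, "src": source, "media": media, "ev": event}
    return f"{PIXEL_ENDPOINT}?{urlencode(params)}"


def parse_pixel_url(url: str) -> dict[str, str]:
    """Server side: everything after the '?' is the payload."""
    query = urlparse(url).query
    return {key: values[0] for key, values in parse_qs(query).items()}


url = build_pixel_url("cmp-123", "publisher-a", "video", "q25")
# A page would embed it as: <img src="...url..." width="1" height="1" alt="">
print(url)
print(parse_pixel_url(url))
```

Note that nothing in this URL proves a browser actually rendered the ad: it is just text that any HTTP client can send.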

To get a better feel for what REALLY happens, let’s take a look at Figure 3. It shows how the browser sends such a request: its headers, payload, size, response, etc.

Figure 3 contains three purple boxes. Box 1 contains the full URL of the request, where the payload is everything after the question mark. The data is encoded in protobuf format [4]. Box 2 shows a positive response: status code 200. Box 3 shows that a 1x1 image is returned.

But, as you would expect: Simple pixel requests by a browser can be faked!

Let’s show you how that can be achieved.

The ‘Copy as cURL’ option in Figure 4 generates a full cURL command that repeats the request exactly as the browser made it. The command includes all headers, e.g. user agent, referrer, and all custom headers, and is copied to the clipboard as text. It can then be pasted into a terminal and executed, as can be seen in Figure 5. The only addition I had to make is --output gen_204.gif (see 3 in Figure 5), because images are binary and thus require a filename.

As you can see, both the request by the browser and the request by cURL from a command prompt were accepted by the web server: both times the server returned HTTP status 200. The status cannot be seen in Figure 5, but running the same command in verbose mode shows it: HTTP/2 200

This cURL example also shows that a browser is nothing more than a serial HTTPS request machine with some additions, like session cookies, persistent storage, scripting, APIs, and a GUI. These are important for humans, but not for bots! On the contrary, when committing ad fraud they are expensive in terms of CPU and memory.

Pre-bid

Opening a webpage with advertisements means that your browser starts a sequence of events in order to show these advertisements. The initial event is pre-bidding, aka header bidding. This enables SSPs and exchanges to bid on an ad slot in a browser. Each of the available advertisements has a price range (min. and max. price), and the one with the highest bid within this range wins the auction and is displayed in the ad slot.
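The auction rule described above can be sketched in a few lines of Python. This is a deliberately simplified model that follows only this paragraph’s description (bids outside the min/max price range are ignored, the highest remaining bid wins); real header bidding involves more, such as timeouts and first- versus second-price rules. Bidder names and prices are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    bidder: str
    cpm: float  # price per 1,000 impressions


def run_auction(bids: list[Bid], min_price: float, max_price: float) -> Optional[Bid]:
    """Return the highest bid inside [min_price, max_price], or None if none qualifies."""
    eligible = [b for b in bids if min_price <= b.cpm <= max_price]
    return max(eligible, key=lambda b: b.cpm, default=None)


bids = [Bid("ssp-a", 1.20), Bid("ssp-b", 2.75), Bid("ssp-c", 9.00)]
winner = run_auction(bids, min_price=1.00, max_price=5.00)
print(winner)  # Bid(bidder='ssp-b', cpm=2.75)
```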

As you can see in Figure 6, a pre-bid request is, just like a pixel, a simple HTTP(S) request. This means it can be generated from the command line as well, just as easily as in the previous example. Fraudsters love command lines, because they are CHEAP and SCALE well. Ever tried to start and open 50 Chrome browsers on your computer? Now try the same with 50 terminal windows firing requests; that’s very doable. It gets even better with a single program in one terminal that fires 50 concurrent GET requests. That’s so lightweight you wouldn’t even notice it running on your laptop or PC.

In a browser you will be using the JavaScript implementation of prebid, Prebid.js [5]. This code fires prebid requests based on the web page’s configuration and the configuration on behalf of advertisers, publishers, and middlemen in the ecosystem. The current Prebid.js code size is 176 kB [8], and that’s minified JavaScript! Although the size is large, AFAIK it doesn’t do any security checks or data collection on behalf of ad fraud verification or detection. The alleged checks are done server side during pre-bidding, but what exactly are these checks and validations? Maybe ask Baghdad Bob?

I have tested firing prebid requests programmatically, using cURL as a simple test and Python to create a more complex, fully configurable flow of prebidding, loading ads and firing pixels, and it does actually work. In a separate future post I will show step by step how this works and how you can emulate a browser, or pretend to be com.some.arbitrary.random.app doing a prebid, capture the returned answer, load advertisements and fire completion pixels. All without a browser, using only Python code and a series of requests. And what I can do without being paid, fraudsters are able to do as well; only they are getting paid, indirectly, through companies’ digital marketing spend.

CTV

In CTV (connected TV), advertisements can also be requested using pre-bid [6][7], where bids are placed on the available pods (commercial breaks). While the advertisements are shown, completion pixels are fired at 25%, 50%, 75% and 100% completion. I keep repeating myself, but technically these are ordinary pixel requests and can simply be generated programmatically.

In CTV no JavaScript can be executed to verify the client’s device and application. This means that the only information the verification side can base a decision on is the incoming request and its payload.
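Since request data is all there is, one basic server-side sanity check is whether the quartile pixels arrive in the right order and no faster than the ad could actually play. The Python sketch below is a hypothetical heuristic, not any vendor’s method: it assumes the server records, per ad view, the receive time of each quartile pixel relative to the ad-start request, and that the ad duration is known. A script that simply sleeps between requests passes it, so it only filters out the laziest fakes.

```python
QUARTILES = ["q25", "q50", "q75", "q100"]
FRACTION = {"q25": 0.25, "q50": 0.50, "q75": 0.75, "q100": 1.00}


def quartiles_plausible(events: list[tuple[str, float]], duration_s: float,
                        tolerance_s: float = 1.0) -> bool:
    """events: (quartile name, seconds since ad start), in order of arrival."""
    names = [name for name, _ in events]
    if names != QUARTILES[: len(names)]:
        return False  # missing, duplicated, unknown or out-of-order quartiles
    times = [t for _, t in events]
    if any(later < earlier for earlier, later in zip(times, times[1:])):
        return False  # timestamps go backwards
    # Each quartile must not arrive before that fraction of the ad could have played.
    return all(t + tolerance_s >= FRACTION[name] * duration_s for name, t in events)


viewer = [("q25", 7.6), ("q50", 15.1), ("q75", 22.6), ("q100", 30.1)]
burst = [("q25", 0.2), ("q50", 0.3), ("q75", 0.4), ("q100", 0.5)]
print(quartiles_plausible(viewer, duration_s=30))  # True
print(quartiles_plausible(burst, duration_s=30))   # False
```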

Streaming music, podcasts, and more

Streaming platforms offer music, podcasts, and video. About two weeks ago I read a New York Times article about a man who generated tons of new music using AI, put it on streaming platforms, and streamed those tracks using bots [9]. He allegedly played his tracks BILLIONS of times using bots. Curiosity killed the cat, and greed killed this one! To me it implies those platforms didn’t have any fraud detection at all, which is why it persisted for so long, at $110k/month!

A few years back I had a Deezer subscription, which allowed me to listen to music in lossless quality. Normal usage would be my browser or the Deezer app connecting to the streaming platform to listen to music. Technically, listening to music is simply a series of requests from my device to the platform, which returns audio based on each request’s payload (i.e. IDs, artist, album, song, etc).

An application called Deemix allows you to download audio from streaming platforms and store it as files on your computer. This enables you to make a local copy and/or collection of albums. It also downloads music much faster than normal listening speed: downloading 10 albums is a matter of seconds. This confirms that no fraud detection mechanism has been implemented. Why? The next section will explain.
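The Deemix pattern is easy to quantify: compare how many seconds of audio a session was served with how much wall-clock time passed. A listener cannot consume much more than one second of audio per second, apart from some prefetch buffering. A minimal Python sketch, where the `max_ratio` threshold and the 45-minute album length are illustrative assumptions; note that it would not catch bots that stream at normal speed, as in the AI music case.

```python
def playback_ratio(audio_seconds_served: float, wall_clock_seconds: float) -> float:
    """Seconds of audio delivered per second of real time."""
    return audio_seconds_served / wall_clock_seconds


def faster_than_real_time(audio_seconds_served: float, wall_clock_seconds: float,
                          max_ratio: float = 1.5) -> bool:
    """Flag sessions that receive audio much faster than anyone can listen to it.
    max_ratio is a hypothetical allowance for prefetching."""
    return playback_ratio(audio_seconds_served, wall_clock_seconds) > max_ratio


# 10 albums of roughly 45 minutes each (assumption), downloaded in 20 seconds.
albums_audio = 10 * 45 * 60
print(playback_ratio(albums_audio, 20))         # 1350.0
print(faster_than_real_time(albums_audio, 20))  # True
print(faster_than_real_time(3 * 60, 3 * 60))    # False: one song at normal speed
```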

Technique 1: Tracking Pixel -- Fraud detection

So, how can ad fraud be determined purely from requests? The first question would be: What data is available to base a decision on? In the case of requests that’s not much; only the data associated with the request:

The conveyed payload: the query string or HTTP POST data

HTTP headers such as: user agent, sec-ch-ua headers, origin, referrer, cookie, x-forwarded-for, etc.

IP address

Network packets, e.g. TLS handshake fingerprint, TCP fingerprint

The first two are generated by the browser, and a fraudster can emulate them 100% with a few lines of code: you only have to capture some real session data and replay the session, see Figures 4 and 5.

Self-declared bots don’t change their true user agent. They are considered ‘good bots’ and are often allowed to access the content of websites, simply because their work helps new customers find your website through search engines. Examples of self-declared bots are:

DuckDuckBot-Https/1.1; (+https://duckduckgo.com/duckduckbot)

Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) Chrome/100.0.4896.127 Safari/537.36

Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/127.0.6533.99 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.4 Safari/605.1.15 (Applebot/0.1; +http://www.apple.com/go/applebot)

IAB Tech Lab continuously updates a list of known spider, crawler and other bot user agents, which is available for an annual fee [18]. This list is the low-hanging-fruit layer: it flags known bot user agents and thus prevents those bots from successfully prebidding and loading an advertisement.
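Matching self-declared bots is essentially a string search. A minimal Python sketch using only the tokens from the four example user agents above; a real deployment would load a maintained list such as the IAB Tech Lab list [18] instead of this hard-coded one. It only catches bots that tell the truth, since anyone can send a browser’s user agent.

```python
import re

# Tokens taken from the example user agents above; NOT an exhaustive list.
KNOWN_BOT_TOKENS = ["DuckDuckBot", "bingbot", "Googlebot", "Applebot"]
BOT_PATTERN = re.compile("|".join(re.escape(t) for t in KNOWN_BOT_TOKENS), re.IGNORECASE)


def is_self_declared_bot(user_agent: str) -> bool:
    return BOT_PATTERN.search(user_agent) is not None


googlebot = ("Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
             "(KHTML, like Gecko) Chrome/127.0.6533.99 Mobile Safari/537.36 "
             "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)")
chrome = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
          "(KHTML, like Gecko) Chrome/127.0.0.0 Safari/537.36")
print(is_self_declared_bot(googlebot))  # True
print(is_self_declared_bot(chrome))     # False
```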

Self-declared bots typically run in data centers and don’t use residential proxies to hide their IP address. A (block)list of data center IP subnets is an additional layer that prevents ads from being served to traffic (bots) originating from data centers. These bots can still be allowed to load the page in order to scan its content, but without the ads.
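Checking whether an IP address falls inside a list of data center subnets is straightforward with Python’s standard `ipaddress` module. The subnets below are RFC 5737 documentation ranges used as placeholders, not real data center ranges, and `should_serve_ads` is a hypothetical helper reflecting the idea above: the page may still be served, but the ads are not.

```python
import ipaddress

# Placeholder subnets (RFC 5737 documentation ranges), NOT real data center ranges.
DATACENTER_SUBNETS = [ipaddress.ip_network(n) for n in ("192.0.2.0/24", "198.51.100.0/24")]


def from_datacenter(ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    # Membership is simply False when the IPv4/IPv6 versions differ.
    return any(addr in net for net in DATACENTER_SUBNETS)


def should_serve_ads(ip: str, self_declared_bot: bool) -> bool:
    """Content may still be served to these clients; only the ads are withheld."""
    return not (self_declared_bot or from_datacenter(ip))


print(from_datacenter("198.51.100.23"))          # True
print(should_serve_ads("203.0.113.5", False))    # True
print(should_serve_ads("198.51.100.23", False))  # False
```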

Fraudsters running their bots in a data center to load advertisements will have to hide their IP address behind residential proxies, at an additional cost.

Changing the TLS fingerprint is not hard, but it requires more technical knowledge. More importantly, it prevents easy scaling, as different applications, and thus browsers (sometimes per version), have different TLS fingerprints. In CTV, different TV brands and streaming sticks have different TLS fingerprint values, differing from version to version. This prevents fraudsters from simply spoofing a user agent (UA), because the combination of UA and TLS fingerprint has to match. It also forces them to constantly validate, and when needed update, their list of fingerprints, as new devices, software updates on those devices, and new browser versions are released monthly.
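The “UA and TLS fingerprint have to match” check can be sketched as a lookup table from browser family to the fingerprints observed for it. Everything here is hypothetical: the family detection is crude, and the fingerprint values are placeholder strings standing in for JA3 hashes that would have to be collected from real traffic and refreshed as new versions ship. An unknown fingerprint is reported as unknown rather than as fraud, for reasons discussed further below (randomization, corporate proxies).

```python
from typing import Optional

# Placeholder values standing in for JA3 hashes observed per client family.
KNOWN_FINGERPRINTS = {
    "firefox": {"ja3-firefox-a", "ja3-firefox-b"},
    "chrome": {"ja3-chrome-a"},
    "curl": {"ja3-curl-a"},
}


def browser_family(user_agent: str) -> Optional[str]:
    """Very rough UA parsing, for illustration only."""
    if "Firefox/" in user_agent:
        return "firefox"
    if "Chrome/" in user_agent:
        return "chrome"
    if user_agent.startswith("curl/"):
        return "curl"
    return None


def check_consistency(user_agent: str, fingerprint: str) -> str:
    family = browser_family(user_agent)
    if family is None:
        return "unknown-user-agent"
    if fingerprint in KNOWN_FINGERPRINTS.get(family, set()):
        return "consistent"
    for other, hashes in KNOWN_FINGERPRINTS.items():
        if fingerprint in hashes:
            return f"mismatch: claims {family}, looks like {other}"
    return "unknown-fingerprint"


firefox_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:129.0) Gecko/20100101 Firefox/129.0"
print(check_consistency(firefox_ua, "ja3-firefox-a"))  # consistent
print(check_consistency(firefox_ua, "ja3-curl-a"))     # mismatch: claims firefox, looks like curl
print(check_consistency(firefox_ua, "ja3-new"))        # unknown-fingerprint
```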

To illustrate this, let’s look at the difference between programmatic requests and real devices used by humans. Figure 8 shows the TLS fingerprint (based on the JA3 hash) for different versions of Firefox. Only the last 4 characters of each fingerprint are shown, and as can be seen the JA3 fingerprint does not change very often. Versions 115 and 116 share the same fingerprint, and since version 117 every Firefox release has had the same TLS fingerprint (based on the JA3 hash).
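For readers wondering what a JA3 hash actually is: JA3 takes five fields from the TLS ClientHello (TLS version, cipher suites, extensions, elliptic curves, and elliptic curve point formats), writes them as decimal numbers joined by dashes within a field and commas between fields, skips GREASE values, and takes the MD5 of the resulting string. A minimal Python sketch; it assumes the ClientHello has already been parsed into integer lists (parsing the raw packet is out of scope), and the input values are illustrative, not taken from Figure 8.

```python
import hashlib

# GREASE values (RFC 8701): 0x0a0a, 0x1a1a, ..., 0xfafa. JA3 ignores them.
GREASE = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3_string(version: int, ciphers: list[int], extensions: list[int],
               curves: list[int], point_formats: list[int]) -> str:
    def field(values: list[int]) -> str:
        return "-".join(str(v) for v in values if v not in GREASE)

    return ",".join([str(version), field(ciphers), field(extensions),
                     field(curves), field(point_formats)])


def ja3_hash(*fields) -> str:
    return hashlib.md5(ja3_string(*fields).encode("ascii")).hexdigest()


# Illustrative, already-parsed ClientHello (0x2A2A is a GREASE cipher value).
hello = (771, [0x2A2A, 4865, 4866, 4867], [0, 23, 65281, 10, 11], [29, 23, 24], [0])
print(ja3_string(*hello))     # 771,4865-4866-4867,0-23-65281-10-11,29-23-24,0
print(ja3_hash(*hello)[-4:])  # last 4 hex characters, as displayed in Figure 8
```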

But there’s TLS fingerprint trouble on the way. Since version 110, Chrome randomizes the order of the TLS extensions in its handshake. The request tool cURL exchanged OpenSSL for LibreSSL from version 8.4.0_9 onwards, and as a result its TLS fingerprint started to appear as a random value. Figure 9 shows that versions prior to 8.4.0_9 share the same fingerprint (blue), and newer versions show a random fingerprint (red). As cURL is open source, some OSes, e.g. Windows 10, ship their own custom-compiled version of cURL, and in the case of Windows the cURL fingerprint is static.

Other request-based libraries and applications have other fingerprints; most of them are relatively static and resemble the fingerprints of the underlying TLS libraries. But it is just a matter of time before they start appearing as randomized fingerprints.

When applications like browsers started randomizing their TLS fingerprint (the JA3 hash), it became harder for fraud detection tools to automatically flag and/or block requests. Blindly blocking unknown or unassociated TLS fingerprints may cause a lot of false positives. For example, at large companies the browser on your workstation can’t connect directly to websites on the Internet, because there’s a proxy server in between. This proxy server decrypts your secure session, checks the data going back and forth (malware detection, scanning for leaks of sensitive company information, etc.), and re-encrypts the session to communicate with the web server. The fraud detection at the receiving end will see the TLS fingerprint of the proxy server, which is completely different from the fingerprint associated with your browser’s user agent. Automatically flagging without understanding what you’re flagging is a recipe for false positives! If you work on a corporate network and certain sites randomly block you, or harass you with CAPTCHAs for no reason, now you know why!

cURL impersonate

In order to emulate the behavior of real browsers, fraudsters have started using special versions of cURL. curl-impersonate [13] is such a version: it lets you emulate the TLS handshake of a real browser. This means that, judging by the JA3 hash, the requests appear to come from genuine browsers. This enables fraudsters to pretend to be a popular browser and spoof its associated fingerprint. Request-based fraud is cheap and easy to scale, and making the requests look like normal browser requests is the icing on the cake for fraudsters.

What does this tell us? The TLS fingerprint enables fraud detection solutions to determine whether an application is lying about its identity. Fraudsters who spoof the TLS fingerprint to match the user agent are invisible at this detection level. Randomizing the TLS fingerprint makes browsers stand out because of their uniqueness. At the detection side, though, you can counter the randomization, e.g. by sorting; although less granular, it is somewhat effective.
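The sorting countermeasure can be shown with a JA3-style string: when a client shuffles its TLS extension order on every connection, the raw hash changes each time, but sorting the extension list before hashing yields a stable value. Normalized fingerprint variants such as JA4, which sorts the cipher and extension lists, build on the same idea. The sketch below is illustrative: the extension values and the shuffled order are made up, and GREASE filtering is omitted for brevity. The price is granularity, because clients that differ only in extension order now hash to the same value.

```python
import hashlib


def ja3_style_hash(version: int, ciphers: list[int], extensions: list[int],
                   curves: list[int], point_formats: list[int],
                   sort_extensions: bool = False) -> str:
    """MD5 over a JA3-style string, optionally with the extension list sorted."""
    exts = sorted(extensions) if sort_extensions else extensions

    def field(values: list[int]) -> str:
        return "-".join(map(str, values))

    text = ",".join([str(version), field(ciphers), field(exts), field(curves), field(point_formats)])
    return hashlib.md5(text.encode("ascii")).hexdigest()


ciphers, curves, formats = [4865, 4866, 4867], [29, 23, 24], [0]
conn_1 = [0, 23, 65281, 10, 11, 35, 16, 5, 13]  # extension order, first connection
conn_2 = [13, 0, 65281, 23, 11, 10, 5, 35, 16]  # same extensions, shuffled order

raw_1 = ja3_style_hash(771, ciphers, conn_1, curves, formats)
raw_2 = ja3_style_hash(771, ciphers, conn_2, curves, formats)
norm_1 = ja3_style_hash(771, ciphers, conn_1, curves, formats, sort_extensions=True)
norm_2 = ja3_style_hash(771, ciphers, conn_2, curves, formats, sort_extensions=True)
print(raw_1 == raw_2)    # False: randomized order breaks the raw hash
print(norm_1 == norm_2)  # True: sorted extensions give a stable fingerprint
```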

So, if an application knocks on the digital door of your ad server, CTV platform, API, or music streaming platform, and its hash shows that it is a known hash of an old cURL (or wget, Python, Go, Electron, JDownloader, Deemix, etc.) version pretending to be a popular browser, you should be aware of that. You might block it by dropping the request, or send it to a slow web server, etc. But in the end, you should be aware of it, and the number of (dropped) bot requests should be in your monthly usage statistics.

Spoofed TLS fingerprints generated by modified versions of cURL do make TLS fingerprinting ineffective. There are no direct solutions to this, only indirect ones, such as additional detection layers like JavaScript.

Technique 2: JavaScript -- Fraud detection

JavaScript can be used to execute code that probes, reads and conveys information from a browser to the fraud detection server. In a common architecture, the fraud detection server makes the fraud decision based on the data the client conveyed. To make that decision, three different classes of data can be collected from the browser.

The three classes of data collection in JavaScript are:

Direct. Reading properties from the browser’s configuration and Web APIs

Challenge/Response. Providing a (random) challenge, to which the browser generates a response specific to that challenge

Human behavior. Recording human interactional data like scrolls, clicks, window resize, visibility, etc.

The paragraphs below contain a description and detailed examples for each class.

JavaScript Class 1: Extracting properties

Every browser has a different configuration: its own settings based on the hardware, environment and operating system. These can be read by JavaScript code executed in the browser. The combination of these properties is considered unique enough to track an individual across the Internet. Examples of such properties are:

Screen size, color depth, pixel ratio

Preferred language settings, time zone

Installed fonts, browser extensions, codecs

Number of CPU cores, memory, heap size, platform

Permissions of webcam, microphone, GPS

Apple Pay availability, battery status, supported speechSynthesis languages and voices

CDP (Chrome DevTools Protocol) signatures

And oh so many more properties can be collected
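Server side, the collected properties are typically combined into a single identifier. One simple way, sketched here in Python under the assumption that the JavaScript posts the properties as a flat JSON object with hypothetical key names, is to serialize them canonically (sorted keys) and hash the result, so the same configuration always yields the same fingerprint.

```python
import hashlib
import json


def fingerprint(properties: dict) -> str:
    """Canonical JSON (sorted keys, no whitespace) hashed with SHA-256."""
    canonical = json.dumps(properties, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical property names, as a client-side script might report them.
laptop = {"screen": "1920x1080", "colorDepth": 24, "pixelRatio": 1.0,
          "languages": ["en-US", "nl"], "timezone": "Europe/Amsterdam",
          "hardwareConcurrency": 8, "platform": "Win32"}
same_laptop_reordered = dict(reversed(list(laptop.items())))
other_timezone = {**laptop, "timezone": "America/New_York"}

print(fingerprint(laptop) == fingerprint(same_laptop_reordered))  # True
print(fingerprint(laptop) == fingerprint(other_timezone))         # False
```

It also shows the weakness described next: anyone who replays someone else’s property values gets exactly the same fingerprint.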

In normal browsers these properties are relatively static and provide a good basis for a persistent fingerprint. But fraudsters rotate these values, or buy fingerprints collected from real people and apply those values in their browsers to match someone else’s fingerprint. This lets a single browser instance resemble many, many different users.

Fraud detection will of course look at how coherent and logical the collected data is. If a declared iPhone doesn’t support Apple Pay but does have a Chrome object, that’s a big red flag. These basic checks are still necessary and will flag amateurish fraudsters, just like bots that forget to spoof their TLS fingerprint.
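Coherence checks are essentially rules over combinations of properties. A minimal Python sketch encoding the iPhone example above, plus the `navigator.webdriver` flag discussed below; the property names and flag labels are hypothetical, and a real rule set would be much larger and tuned on real traffic to avoid false positives.

```python
def coherence_flags(props: dict) -> list[str]:
    """Return the names of the (hypothetical) consistency rules the properties violate."""
    flags = []
    claims_iphone = props.get("platform") == "iPhone"
    # The example from the text: an iPhone without Apple Pay but with a Chrome object.
    if claims_iphone and not props.get("applePayAvailable") and props.get("hasChromeObject"):
        flags.append("iphone-without-apple-pay-with-chrome-object")
    # Only catches automation that forgot to spoof navigator.webdriver.
    if props.get("webdriver") is True:
        flags.append("webdriver-true")
    return flags


suspect = {"platform": "iPhone", "applePayAvailable": False, "hasChromeObject": True}
normal = {"platform": "iPhone", "applePayAvailable": True, "hasChromeObject": False,
          "webdriver": False}
print(coherence_flags(suspect))  # ['iphone-without-apple-pay-with-chrome-object']
print(coherence_flags(normal))   # []
```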

About a year ago (Apr 2023) Google implemented a new headless mode in Chrome. Prior to that update, the fingerprints of a headless and a headful browser were quite distinct. The new mode, however, resembles the headful mode except for the value of the property navigator.webdriver. If it returns true, the browser is controlled by automation software; if false, a human is supposedly interacting with the browser. But, as you can imagine, this value will be spoofed to return false. Every fraudster and their mother knows this!

One way to detect browser automation in this new headless mode is to check whether ChromeDriver is enabled and communicating with the browser. The scraping world’s answer has been to create ways to remotely control a Chrome browser without the driver [17]. This technology can also be used to load pages with advertisements, interact with landing pages, etc.

Unfortunately, you won’t catch professional fraudsters by reading properties, because they know exactly what to patch and how to patch it, or simply bypass it. To catch the professionals you need to go to the next level: challenge/response detection.

JavaScript Class 2: Challenge / Response

In security there are three common forms of authentication: something you know, something you have, and something you are. Reading properties (class 1) using JavaScript can be seen as ‘something your browser knows’, though unlike passwords the properties of a browser aren’t secret; they simply differ from device to device. ‘Something you have’ can be seen as an authentication cookie, access token, etc. ‘Something your hardware + browser is’ is similar to a challenge/response (class 2).

The challenge is to write a piece of code that can be run on every browser and generates different output depending on the configuration of the browser, operating system and hardware. Different systems with the same operating system (OS) and hardware will generate the same response to a given challenge. Because the challenge is randomized, it is hard, or near impossible, to predict the associated response.

Figure 10 shows the implementation flow of the JavaScript challenge/response. The JavaScript executes a test that depends on the configuration of the OS and/or the type of hardware. At (1) the server generates a unique JavaScript that is sent to the browser. The browser needs the OS and/or hardware to execute this task (2). Depending on how the browser has implemented this, the configuration of the device, and the available hardware, an answer is returned (3). That answer is captured and conveyed to the server that generated the challenge. In this scenario there is no right or wrong: an answer will always be generated and returned. Whether the response can be associated with known bots or normal browsers is up to the fraud detection engine.
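The server side of this flow can be sketched as two functions: one that issues a randomized challenge and remembers it, and one that receives the browser’s answer and looks it up. This Python sketch assumes the emoji-rendering challenge described next (font, font size and code point are randomized, the browser reports the rendered bounding box); the in-memory store, the `known_responses` table and its labels are hypothetical stand-ins for a database built from observed traffic. In line with the text, an unrecognized answer is labelled an outlier, not “wrong”.

```python
import secrets

FONTS = ["sans-serif", "serif", "monospace"]
FONT_SIZES_PX = [16, 24, 32, 48]
CODEPOINTS = [128522, 128512, 129321]  # U+1F60A, U+1F600, U+1F929

# Hypothetical in-memory store: nonce -> (font, size, codepoint).
_pending: dict[str, tuple[str, int, int]] = {}


def issue_challenge() -> dict:
    """Pick random parameters and remember them under a one-time nonce."""
    nonce = secrets.token_hex(8)
    params = (secrets.choice(FONTS), secrets.choice(FONT_SIZES_PX), secrets.choice(CODEPOINTS))
    _pending[nonce] = params
    font, size, codepoint = params
    return {"nonce": nonce, "font": font, "size_px": size, "codepoint": codepoint}


def evaluate_response(nonce: str, width: int, height: int, known_responses: dict) -> str:
    """Look up the reported bounding box for this exact challenge."""
    params = _pending.pop(nonce, None)  # each nonce can be answered only once
    if params is None:
        return "unknown-or-replayed-nonce"
    return known_responses.get(params, {}).get((width, height), "outlier")


challenge = issue_challenge()
key = (challenge["font"], challenge["size_px"], challenge["codepoint"])
known = {key: {(22, 28): "chrome-on-macos"}}  # hypothetical label and size
print(evaluate_response(challenge["nonce"], 22, 28, known))  # chrome-on-macos
print(evaluate_response(challenge["nonce"], 22, 28, known))  # unknown-or-replayed-nonce
```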

An example: different operating systems have different fonts installed. Some fonts are available on Mac, some on Windows [10], etc. As fonts are vector based, they are rendered by the browser on the spot. This means that different browsers might render the same font differently, and that the result also depends on the availability of a glyph [14] in that font and on the font fallback when the requested font is not available on the device.

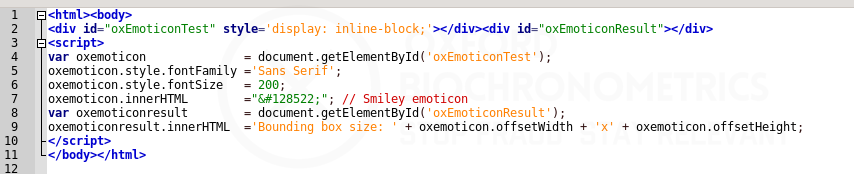

This becomes apparent when rendering emoji. If available, the emoji is rendered; if not, a box is rendered. Whether a full-color, simple-color or black/white version is rendered depends on the browser and the available fonts. The code example in Figure 11 shows how the Unicode character 128522 (U+1F60A, 😊) is displayed in a browser using the sans-serif font. Subsequently, the size of its bounding box is displayed as width x height below the emoji. This challenge can be randomized by changing the font, font size and emoji.

Would such a simple piece of code generate different output in different browsers? Yes, it does! The test doesn’t even count the number of colors used, or calculate a hash of the rendered emoji. Figure 12 shows how differently this single smiley is rendered on the few devices and browsers I have. At scale, looking at millions and millions of devices, these tests will show what is human, what is a bot, and what is an outlier that needs further inspection.
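“At scale” can be taken literally: for one challenge and one claimed browser/OS, count how often each answer occurs, and treat very rare answers as outliers to inspect, not to block. A minimal Python sketch; the response strings, counts and the 0.5% threshold are made up for illustration.

```python
from collections import Counter


def rare_responses(responses: list[str], min_share: float = 0.005) -> set[str]:
    """Responses whose share of all observations falls below min_share."""
    counts = Counter(responses)
    total = sum(counts.values())
    return {response for response, n in counts.items() if n / total < min_share}


# Bounding boxes reported for one challenge by clients claiming the same browser and OS.
observed = ["22x28"] * 600 + ["24x28"] * 399 + ["31x40"] * 1
print(rare_responses(observed))  # {'31x40'}
```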

Another example of a challenge/response test is Google’s Picasso: Lightweight Device Class Fingerprinting for Web Clients [11]. This research shows that when different browser versions render the exact same shapes (the challenge), the individual pixels differ per browser engine and version (the response). Based on the response, the verification solution knows exactly which browser and engine version ran the test, which can be checked against the provided user agent. The next level of this type of test is to add the differences per GPU to the mix. And as we all know, scaling across different types of hardware is expensive.

The fraudsters’ answer to this type of test has been to stop spoofing user agents and instead use the browser that matches the reported user agent, keeping it up to date so they blend in with what everybody is using. Their problem, however, is the variety of hardware. As they typically run their browsers in the cloud, preferably in containers, they use the cloud’s hardware. This leaves them caught between a rock and a hard place, and their answer to prevent detection has been to randomize the challenge/response result. As a result, each fraud detection test running in their browsers produces an outlier. To prevent false positives, outliers cannot be blocked or flagged blindly; they can only be blocked using rate limiting rules way above normal human thresholds.

For the last few years this has been a game of whack-a-mole: fraud detection companies update their detection, fraudsters subsequently patch their browsers to avoid it, and so on. The benefit of forcing fraudsters into this corner is that the browser-based approach doesn’t scale at very low cost. Rate limiting per collected fingerprint per IP address and/or IP subnet forces fraudsters to use proxies, which again increases their costs.
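Rate limiting per fingerprint per subnet can be sketched as a sliding-window counter keyed on the pair (fingerprint, subnet). In this Python sketch the limits, the /24 (IPv4) and /64 (IPv6) grouping, and the class name are assumptions chosen for illustration; as the text says, real thresholds would sit well above normal human behavior.

```python
import ipaddress
import time
from collections import defaultdict, deque
from typing import Optional


class FingerprintRateLimiter:
    """Sliding-window limit on events per (fingerprint, IP subnet). Hypothetical sketch."""

    def __init__(self, max_events: int, window_s: float, v4_prefix: int = 24, v6_prefix: int = 64):
        self.max_events = max_events
        self.window_s = window_s
        self.v4_prefix = v4_prefix
        self.v6_prefix = v6_prefix
        self._events: dict[tuple[str, str], deque] = defaultdict(deque)

    def _key(self, fingerprint: str, ip: str) -> tuple[str, str]:
        addr = ipaddress.ip_address(ip)
        prefix = self.v4_prefix if addr.version == 4 else self.v6_prefix
        subnet = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
        return fingerprint, str(subnet)

    def allow(self, fingerprint: str, ip: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        events = self._events[self._key(fingerprint, ip)]
        while events and now - events[0] >= self.window_s:
            events.popleft()  # forget events that left the window
        if len(events) >= self.max_events:
            return False
        events.append(now)
        return True


limiter = FingerprintRateLimiter(max_events=3, window_s=60)
print([limiter.allow("fp-1", "198.51.100.7", now=t) for t in (0, 1, 2, 3)])
# [True, True, True, False]
print(limiter.allow("fp-1", "198.51.100.200", now=4))  # False: same /24 subnet
print(limiter.allow("fp-1", "203.0.113.9", now=4))     # True: different subnet
```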

JavaScript Class 3: Capturing human interaction behavior

The upper part of the marketing funnel, i.e. pre-bid, impressions, and clicks on impressions, does not involve much human interaction. At most some visibility data (in viewport/out of viewport) can be collected, and hopefully a click.

The lower in the funnel, the more the prospect will interact. On the landing page you expect the prospect to read about your product and, once convinced, fill out the lead generation form. Filling out a form involves a lot of user interaction: clicking, typing, touching, zooming, etc.

Behavioral analysis =/= Behavioral statistics

Counting the number of clicks, or computing the click:scroll ratio, average mouse speed per second, mouse acceleration, mouse trajectory distance, etc. creates a series of behavioral statistics. This doesn’t capture the underlying behavior. It’s like trying to classify a chess game by measuring the distance each piece has traveled across the board: it says nothing about the strategy of the game or its outcome. Using these simplified behavioral statistics is prone to false positives.
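A tiny Python example of the flattening problem: the two mouse traces below are made up, one moving at a perfectly constant speed (machine-like) and one speeding up and slowing down, yet they have exactly the same average speed. The statistic throws away the timing structure. This only illustrates the argument; it is not how Oxford Biochronometrics’ behavioral analysis works.

```python
import math
import statistics

# (time in ms, x, y) samples; made-up traces covering the same 40 px in 400 ms.
constant = [(0, 0, 0), (100, 10, 0), (200, 20, 0), (300, 30, 0), (400, 40, 0)]
variable = [(0, 0, 0), (50, 2, 0), (200, 25, 0), (350, 38, 0), (400, 40, 0)]


def segment_speeds(trace):
    """Speed (px/ms) of each segment between consecutive samples."""
    return [math.dist(a[1:], b[1:]) / (b[0] - a[0]) for a, b in zip(trace, trace[1:])]


def average_speed(trace):
    """Total path length divided by total duration: the 'flattened' statistic."""
    distance = sum(math.dist(a[1:], b[1:]) for a, b in zip(trace, trace[1:]))
    return distance / (trace[-1][0] - trace[0][0])


print(average_speed(constant), average_speed(variable))  # 0.1 0.1
print(statistics.pstdev(segment_speeds(constant)))       # 0.0
print(statistics.pstdev(segment_speeds(variable)) > 0)   # True
```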

Behavioral analysis goes much further than flattening and averaging behavioral data. This is the area where Oxford Biochronometrics excels, and where the company name comes from: Bio = life, Chronos = time, Metrics = measuring stuff. Measuring (human) behavior over time. Automated behavior from a bot over time differs completely from human behavior. Human-operated fraud also differs from regular human behavior.

Compare it with simple handwriting differences, where typewriters are the bots and handwriting belongs to a human. The difference between the two is like night and day. The differences between human-operated fraud and regular human usage are more subtle. Showing these differences would be a long post of its own and beyond the goal of this article. If there’s enough demand for such a post, I might write an article about OxBio’s former behavioral analytics models; let me know in the comments.

Final words

Your takeaway should be that ad fraud exists and is a lot more than the reported <1%, simply because for fraudsters it’s lucrative and almost risk free.

The opposite of ‘ignorance is bliss’ is: Knowledge is power. Having knowledge means being in control.

In prebid, CTV, and streaming, TLS fingerprinting is a must to prevent fraudsters from scaling easily and massively, like that dude streaming his own AI songs. At a minimum, pre-bid filtering, ad verification or fraud detection will have to collect and validate these fingerprints. Although firing requests using modified versions of cURL is possible, other techniques, e.g. TCP fingerprinting, add layers of detection and still enable quality fraud detection.

Educating marketers and other interested folks on the foundations of fraud detection, i.e. what types of detection exist and what is and is not possible to achieve, is the first step for brands to become more resilient. You don’t have to understand everything in this post 100% or know how to do this yourself. As long as you know whom to invite to assess the vendors technically and ask difficult but necessary questions during the ad fraud vendor selection process, you’re safer than if you simply believe the vendors’ sales representatives.

The next article in this series will be about how analytics is used to make the detection actionable.

Corrections? Suggestions? Questions? Feel free to comment, connect, or DM

Appendix

Links mentioned in the article. They are not listed in order of appearance.

[1] https://iabtechlab.com/wp-content/uploads/2022/04/OpenRTB-2-6_FINAL.pdf

[2] https://support.google.com/admanager/answer/6123557?hl=en


[3] https://helpcenter.integralplatform.com/article/publisher-verification-implementation-guide

[4] https://protobuf.dev/overview/

[5] https://docs.prebid.org/prebid/prebidjs.html

[6] https://docs.prebid.org/formats/ctv.html

[7] https://files.prebid.org/docs/Prebid_for_CTV-OTT.pdf

[8] https://docs.prebid.org/download.html

[9] https://www.nytimes.com/2024/09/05/nyregion/nc-man-charged-ai-fake-music.html

[10] https://en.wikipedia.org/wiki/List_of_typefaces_included_with_Microsoft_Windows

[11] https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/45581.pdf

[12] https://daniel.haxx.se/blog/2022/09/02/curls-tls-fingerprint/

[13] https://github.com/lwthiker/curl-impersonate

[14] https://en.wikipedia.org/wiki/Glyph

[15] https://jasonzweig.com/three-ways-to-get-paid/

[16] https://www.emarketer.com/press-releases/2024-political-ad-spending-will-jump-nearly-30-vs-2020/

[17]

[18] https://iabtechlab.com/software/iababc-international-spiders-and-bots-list/